Scientific Achievement

A team of Foundry staff and users demonstrated an equivalence between two families of neural-network learning dynamics, gradient-based and non-gradient-based training, that are usually considered fundamentally different.

Significance and Impact

This work provides fundamental understanding of how neural networks learn, and sets the stage for the design of new deep-learning algorithms that carry out gradient descent without explicit evaluation of gradients.

Research Details

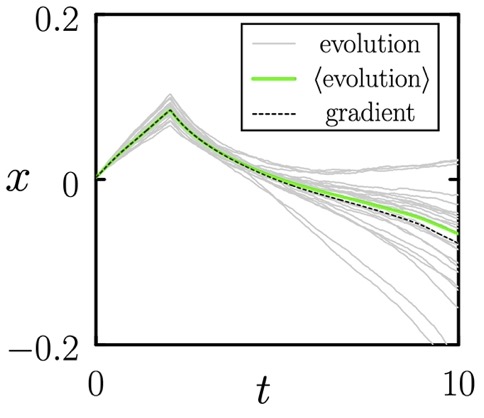

- In machine learning, neural networks are usually trained by gradient-based methods (e.g. gradient descent using gradients computed by backpropagation), and sometimes by non-gradient-based methods (e.g. evolutionary algorithms or other black-box optimization).

- These two families of methods are usually considered fundamentally different. However, using an analogy from the physics literature that connects different forms of molecular dynamics, the authors demonstrated a fundamental connection between them.

- This demonstration offers a new way of viewing seemingly distinct machine-learning methods, further supports the deep connection between statistical mechanics and deep learning, and paves the way for new learning algorithms.
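As a hedged illustration of the kind of correspondence described above (not the authors' code, and not necessarily their exact scheme), the sketch below assumes a simple neuroevolution-style rule: perturb the parameters x by a Gaussian mutation of scale sigma and keep the mutant with the Metropolis probability min(1, exp(-beta * dU)), where dU is the change in loss. A standard small-step calculation, the same kind that links Metropolis Monte Carlo to gradient-driven Langevin dynamics in physics, gives an average displacement of about -(beta * sigma^2 / 2) * grad U(x) when beta * sigma * |grad U| is small. On average this is a gradient-descent step with learning rate beta * sigma^2 / 2, obtained without ever evaluating a gradient. The toy quadratic loss, the parameter values, and all function names are hypothetical choices made for this example.

```python
import math
import random


def loss(x):
    """Hypothetical toy loss U(x) = 0.5 * (x0**2 + 4 * x1**2), standing in for a network's loss."""
    return 0.5 * (x[0] ** 2 + 4.0 * x[1] ** 2)


def grad_loss(x):
    """Analytic gradient of the toy loss, used only for comparison (the mutation step never calls it)."""
    return [x[0], 4.0 * x[1]]


def accept_prob(delta_u, beta):
    """Metropolis rule: always keep improvements, keep worse mutants with probability exp(-beta * dU)."""
    return 1.0 if delta_u <= 0.0 else math.exp(-beta * delta_u)


def mean_mutation_step(x, sigma, beta, n_pairs, rng):
    """Estimate the expected displacement of one mutate-then-accept step, using loss values only.

    A mutation eps ~ N(0, sigma^2 I) moves x to x + eps with probability p(eps), so the
    expected displacement is E[eps * p(eps)]; we average eps * p(eps) directly instead of
    flipping an accept/reject coin. Each eps is paired with -eps (equally likely), which
    keeps the estimate unbiased while reducing its variance.
    """
    u0 = loss(x)
    total = [0.0] * len(x)
    for _ in range(n_pairs):
        eps = [rng.gauss(0.0, sigma) for _ in x]
        for sign in (1.0, -1.0):
            trial = [xi + sign * ei for xi, ei in zip(x, eps)]
            p = accept_prob(loss(trial) - u0, beta)
            for i, ei in enumerate(eps):
                total[i] += sign * ei * p
    return [t / (2 * n_pairs) for t in total]


def gradient_descent_step(x, learning_rate):
    """Ordinary gradient-descent displacement: -learning_rate * grad U(x)."""
    return [-learning_rate * g for g in grad_loss(x)]


if __name__ == "__main__":
    rng = random.Random(0)
    x = [1.0, -0.5]
    sigma, beta = 1e-3, 1.0
    mutation = mean_mutation_step(x, sigma, beta, n_pairs=20000, rng=rng)
    gradient = gradient_descent_step(x, learning_rate=beta * sigma**2 / 2.0)
    print("mean mutation step:   ", mutation)
    print("gradient-descent step:", gradient)
```

With these assumed settings the two printed vectors should agree to within a few percent of sampling noise. The agreement is only expected in the small-mutation regime: if sigma or beta is raised until beta * sigma * |grad U| is no longer small, the average mutation step drifts away from the gradient-descent step.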